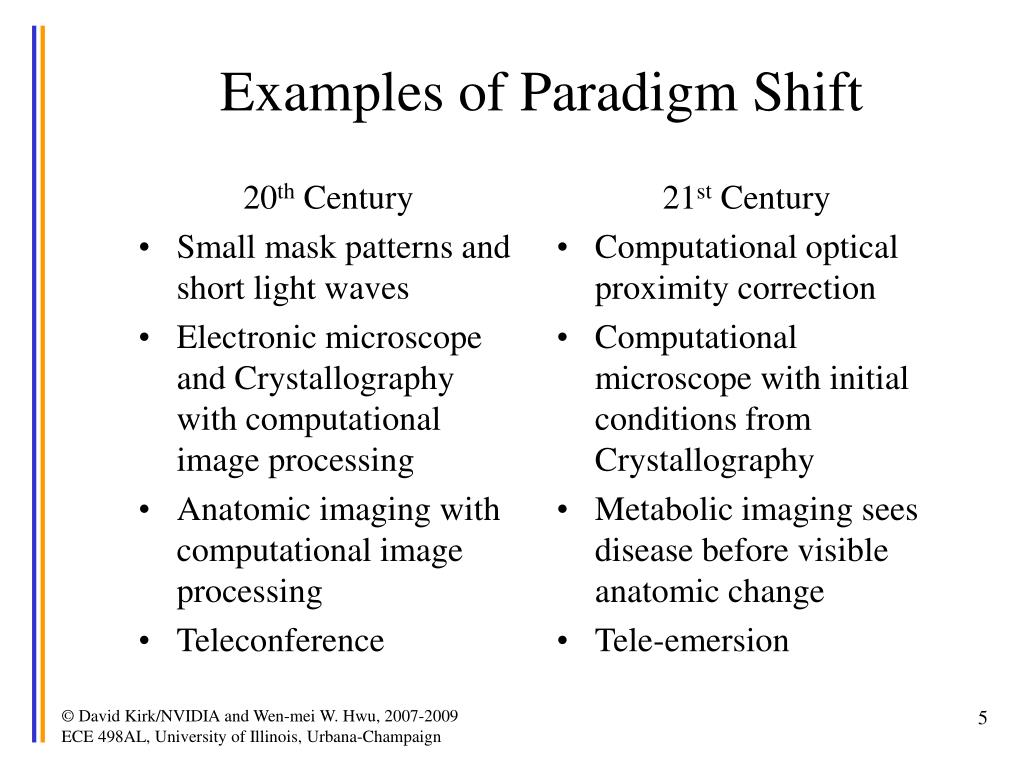

So we have to shift our approach from the chip to the system. One approach, called 3D stacking, would simply combine integrated circuits into a single three-dimensional chip. This is harder than it sounds, because entirely new chip designs have to be devised, but it could increase speeds significantly and allow progress to continue.

Since the 1960s, when Moore wrote his article, the ever-expanding power of computers has made new applications possible. For example, after relational databases were developed in 1970, it became possible to store and retrieve massive amounts of information quickly and easily. That, in turn, dramatically changed how organizations could be managed. Later innovations, like graphic displays, word processors and spreadsheets, set the stage for personal computers to be widely deployed. The Internet led to email, e-commerce and, eventually, mobile computing. In essence, the modern world is little more than the applications that make it possible.

Until now, all of these applications have run on von Neumann machines: devices with a central processing unit paired with data and applications stored in a separate place. So far, that's worked well enough, but for the things we've begun asking computers to do, like powering self-driving cars, the von Neumann bottleneck, the limited rate at which data can move between the processor and that separate memory, is proving to be a major constraint. So the emphasis is moving from developing new applications to developing new architectures that can handle them better.

Neuromorphic chips, based on the brain itself, will be thousands of times more efficient than conventional chips. Quantum computers, which IBM has recently made available in the cloud, work far better for security applications. New FPGA chips can be optimized for other applications. Soon, when we choose to use a specific application, our devices will automatically be switched to the architecture (often, but not always, made available through the cloud) that can run it best.

It used to be that firms looked to launch hit products. If you look at the great companies of the last century, they often rode to prominence on the back of a single great product, like IBM's System/360, the Apple II or Sony's Walkman. Those first successes could then lead to follow-ups, like the PC and the Macintosh, and to further dominance.

Yet look at successful companies today and you'll find that they make their money from platforms. Amazon earns the bulk of its profits from third-party sellers, Amazon Prime and cloud computing, all of which are platforms. And what would Apple's iPhone be without the App Store, where so much of its functionality comes from?

Platforms are important because they allow us to access ecosystems. Amazon's platform connects ecosystems of retailers to ecosystems of consumers. The App Store connects ecosystems of developers to ecosystems of end users. IBM has learned to embrace open technology platforms, because they give it access to capabilities far beyond its own engineers.

The rise of platforms makes it imperative that managers learn to think differently about their businesses. While in the 20th century firms could achieve competitive advantage by optimizing their value chains, the future belongs to those who can widen and deepen connections.

In *The Rise and Fall of American Growth*, economist Robert Gordon argues that the rapid productivity growth the US experienced from 1920 to 1970 is largely a thing of the past. Among the reasons he gives is that, while earlier innovations such as electricity and the internal combustion engine had broad implications, the impact of digital technology has been fairly narrow. While there may be short spurts of growth, like the one in the late 1990s, we're not likely to see a sustained period of progress anytime soon. We see, to paraphrase Robert Solow, digital technology just about everywhere except in the productivity statistics.

Still, there are indications that the future will look very different from the past. Digital technology is beginning to power new areas in the physical world, such as genomics, nanotechnology and robotics, that are already having a profound impact on such high-potential fields as renewable technology, medical research and logistics.

It is all too easy to get caught up in old paradigms. When progress is powered by chip performance and the increased capabilities of computer software, we tend to judge the future by those same standards. What we often miss is that paradigms shift, and the challenges (and opportunities) of the future are likely to be vastly different.